LiteReality

Graphics-Ready 3D Scene Reconstruction from RGB-D Scans

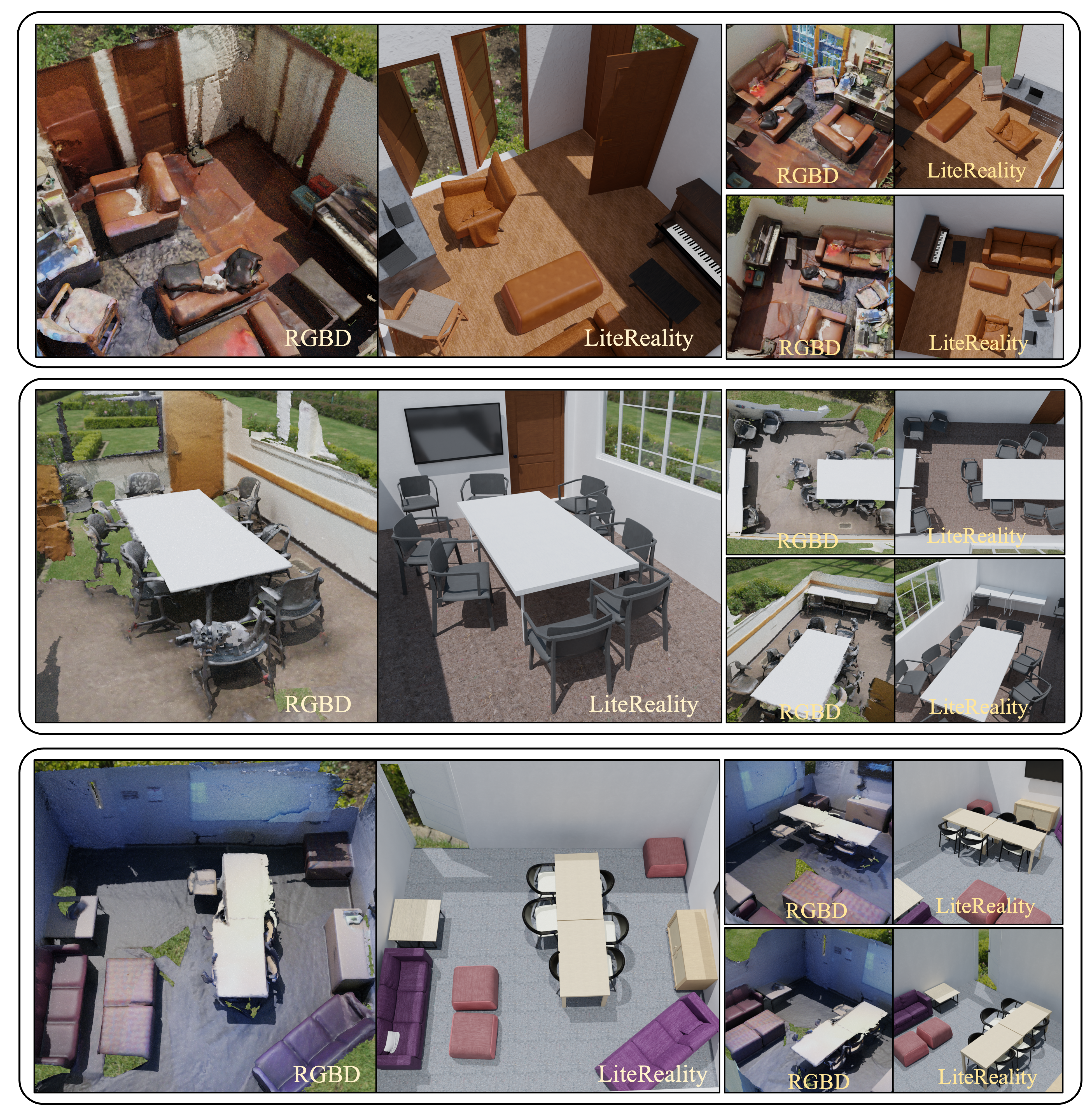

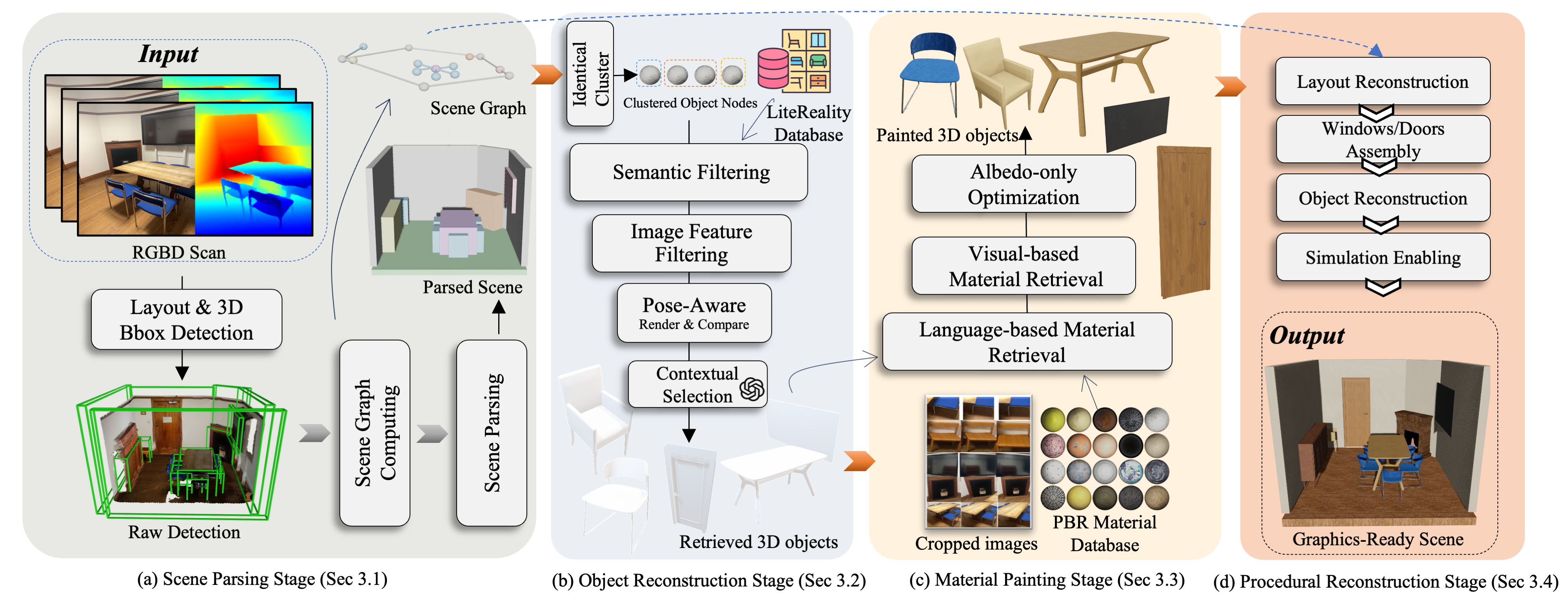

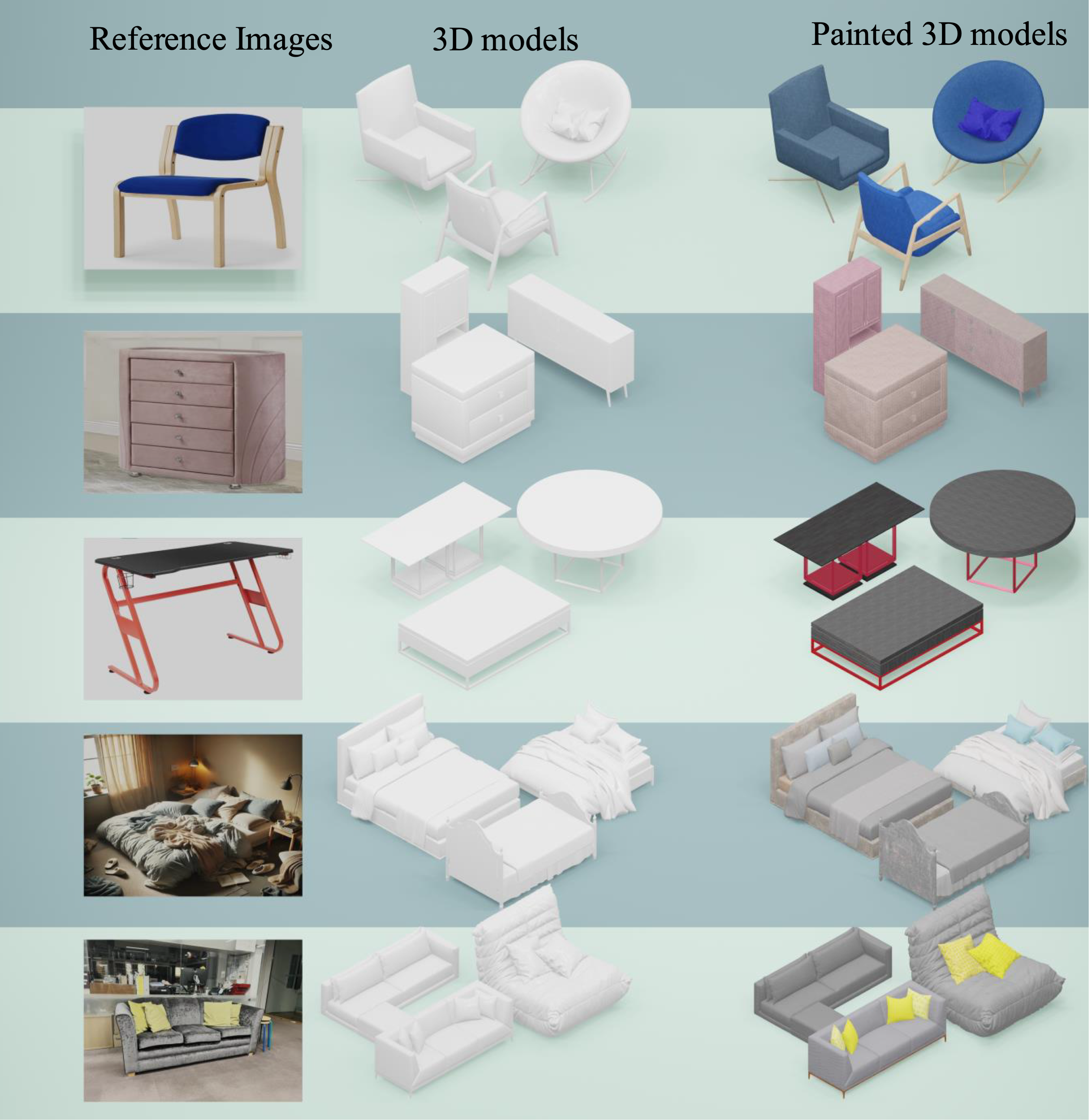

We are excited to present LiteReality ✨, an automatic pipeline that converts RGB-D scans of indoor environments into graphics-ready 🏠 scenes. In these scenes, all objects are represented as high-quality meshes with PBR materials 🎨 that match their real-world appearance. The scenes also include articulated objects 🔧 and are ready to integrate into graphics pipelines for rendering 💡 and physics-based interactions 🕹️.